Networking Principles and Nvidia’s Innovations in Hyperscale and AI Workloads

At The Next Platform, our networking philosophy is straightforward: for hyperscale networking in distributed, non-coherent applications, the mantra is “Route when you can, switch if you must.” However, for latency and bandwidth-sensitive workloads like HPC and AI, we adhere to the opposite: “Switch when you can, route if you must.” When it comes to cabling, the rule is: “Copper when you can, fiber when you must.”

This principle is vividly demonstrated in Nvidia’s GB200 NVL72 rackscale system, which leverages over 5,000 copper cables to connect its 36 MGX server nodes, each equipped with two “Blackwell” B200 GPUs and a “Grace” CG100 Arm processor. The system uses NVSwitch 4 interconnects to create a unified CPU and GPU memory fabric, with NVLink 5 SerDes operating at 224 Gb/sec. While copper is sufficient for intra-rack communication, the increasing heat density and bandwidth demands are pushing Nvidia toward liquid cooling and, eventually, co-packaged optics (CPO) for GPUs and CPUs.

The Case for Co-Packaged Optics (CPO)

As bandwidth demands grow, copper’s limitations become more apparent. Doubling bandwidth on copper wires halves the effective wire length due to signal degradation. With Nvidia’s next-generation “Rubin” GPUs and NVLink 6, the need for CPO becomes critical to maintain performance and reduce heat. CPO integration on GPUs and CPUs, such as the future “Vera” CPUs, will likely become essential to support larger NUMA domains for AI inference workloads.

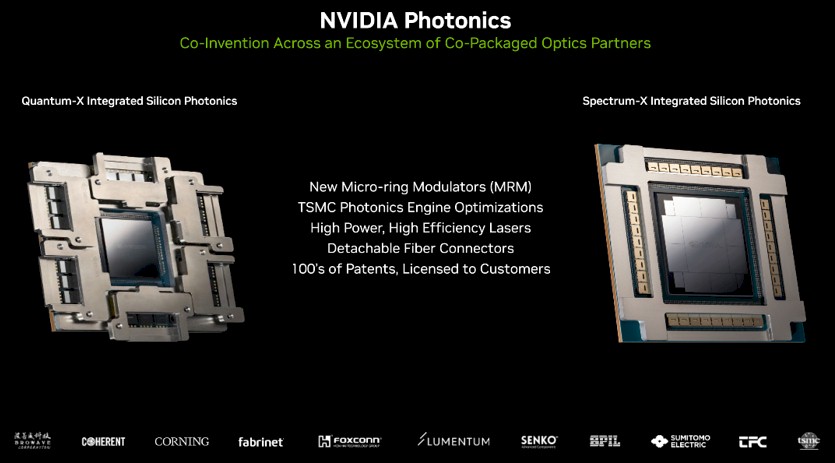

At Nvidia’s GPU Technical Conference 2025, CEO Jensen Huang unveiled plans to adopt silicon photonics and CPO for its Quantum InfiniBand and Spectrum Ethernet switches. This move is expected to significantly reduce power consumption in datacenter-scale AI systems, where optical transceivers currently account for a substantial portion of both cost and energy use.

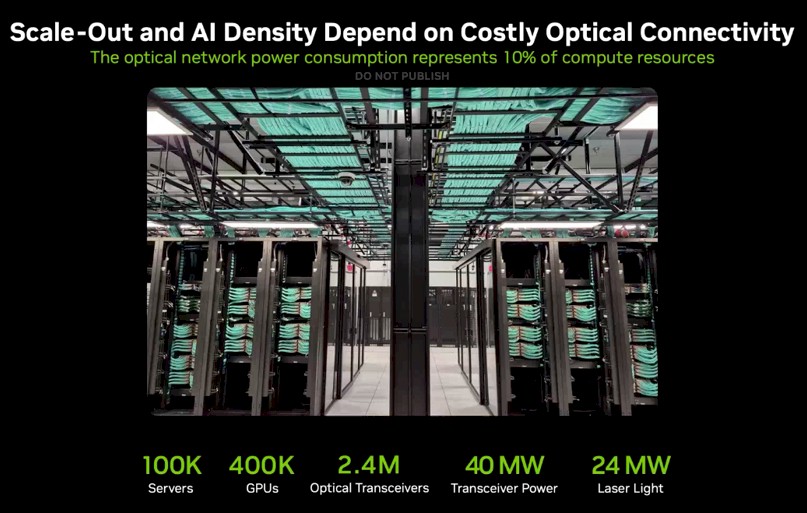

Power and Cost Challenges in Datacenter Networking

Optical transceivers are a major expense in datacenter clusters, often constituting 75-80% of network costs. In a hypothetical datacenter with 100,000 servers and 400,000 GPUs, 2.4 million optical transceivers consume 40 megawatts of power, with lasers alone accounting for 24 megawatts. By contrast, traditional hyperscale datacenters use far fewer transceivers, as CPU-based servers require fewer ports than GPU-heavy AI systems.

Nvidia’s shift to CPO aims to address these challenges. By integrating optics directly into switch ASICs, Nvidia can reduce power consumption by 3.3X and signal noise by 5.5X. This innovation will enable denser GPU configurations within the same power envelope, with fewer lasers required for interconnects.

Nvidia’s CPO Switch Designs

Nvidia is developing three CPO-based switches:

- Quantum 3450-LD: A 144-port InfiniBand switch with 115 Tb/sec aggregate bandwidth, available in late 2025.

- Spectrum SN6810: A 128-port Ethernet switch with 102.4 Tb/sec bandwidth, expected in late 2026.

- Spectrum SN6800: A high-capacity Ethernet switch with 512 ports and 409.6 Tb/sec bandwidth, also slated for late 2026.

These switches will use silicon photonics engines and micro-ring modulators (MRMs) developed in collaboration with partners like TSMC. The integration of high-efficiency lasers and detachable fiber connectors will further enhance performance and reliability.

Future Implications

Nvidia’s adoption of CPO marks a significant step toward more efficient and scalable datacenter networking. By reducing power consumption, signal noise, and component density, CPO will enable the next generation of AI and HPC workloads. As hyperscalers and cloud builders increasingly adopt Ethernet for AI clusters, Nvidia’s Spectrum-X switches with CPO are poised to play a pivotal role in shaping the future of datacenter infrastructure.

In summary, Nvidia’s innovations in silicon photonics and CPO represent a transformative shift in networking technology, addressing the dual challenges of power efficiency and scalability in AI-driven datacenters.