High-tech companies, especially those in the semiconductor and IT sectors, rely heavily on roadmaps to guide their product development and strategic planning. These roadmaps, whether shared publicly or privately with key investors and customers, are essential for de-risking technology adoption and ensuring long-term scalability. For enterprises investing millions of dollars per rack, understanding a vendor’s roadmap is critical to avoid hitting performance or capacity ceilings, which can stall critical applications.

Historically, companies like Oracle, Nvidia, and AMD have used roadmaps to signal their commitment to innovation and to reassure customers of their ability to deliver continuous improvements. For instance, Oracle’s five-year roadmap following its acquisition of Sun Microsystems, and Nvidia’s four-year roadmap during the early days of GPU-accelerated computing, were pivotal in building trust and setting expectations.

Nvidia’s Roadmap: A Strategic Blueprint for AI Dominance

Nvidia, a leader in AI training and inference, has a comprehensive roadmap that spans GPUs, CPUs, DPUs, and networking technologies. This roadmap is not just a technical plan but also a strategic tool to reinforce Nvidia’s position in the AI market, especially as hyperscalers and cloud builders develop their own AI accelerators and CPUs. By publicly sharing its roadmap, Nvidia aims to demonstrate its ability to deliver superior systems and maintain its competitive edge.

Key Components of Nvidia’s Roadmap

- GPUs and CPUs:

- Blackwell B300 (Blackwell Ultra): The latest GPU, designed for large-scale AI inference and training, features 288 GB of HBM3E memory (a 50% increase over the B100/B200) and delivers 15 petaflops of FP4 performance. The GB300 NVL72 system, expected in late 2025, will offer 1,100 petaflops of FP4 inference and 360 petaflops of FP8 training performance.

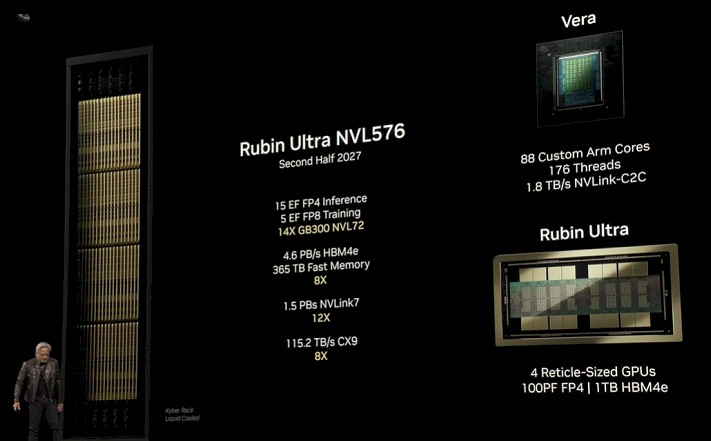

- Vera CV100 CPU: Slated for late 2026, this Arm-based processor will feature 88 cores with simultaneous multithreading, supporting 176 threads. It will double NVLink C2C bandwidth to 1.8 TB/sec, up from 900 GB/sec on the current Grace CPU, matching the NVLink bandwidth of today’s Blackwell GPUs.

- Rubin R100 GPU: Also launching in late 2026, this GPU will feature 288 GB of HBM4 memory, with memory bandwidth rising 62.5% over the B300 to 13 TB/sec. The Vera Rubin NVL144 system will deliver 3.6 exaflops of FP4 inference and 1.2 exaflops of FP8 training performance, a 3.3X improvement over the GB300 NVL72.

- Networking and Interconnects:

- NVLink and NVSwitch: Nvidia continues to enhance its interconnect technologies, with NVLink 7 and NVSwitch 6 offering 3.6 TB/sec bandwidth by late 2026. These advancements are critical for scaling shared memory systems and improving AI workload performance.

- ConnectX SmartNICs: The ConnectX-8, launching later this year, will support 800 Gb/sec Ethernet and InfiniBand, doubling the bandwidth of its predecessor.

- Future Innovations:

- Rubin Ultra (R300): Expected in late 2027, this GPU will feature four reticle-limited chiplets in a single SXM8 socket, delivering 100 petaflops of FP4 performance and 1 TB of HBM4E memory. The VR300 NVL576 system will offer 15 exaflops of FP4 inference and 5 exaflops of FP8 training, a 21X improvement over current systems.

- Feynman GPUs: By 2028, Nvidia plans to double performance again with the Feynman generation, paired with Vera CPUs, 3.2 Tb/sec ConnectX-10 NICs, and 7.2 TB/sec NVSwitch 8 switches.
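The generation-over-generation claims above are simple ratios of the quoted rack-level figures, so they can be checked directly. The Python sketch below is a minimal illustration that takes the article's numbers at face value. The dictionary keys are shorthand labels chosen for this sketch, not official product names, and the per-package check assumes that the 576 in NVL576 counts individual GPU chiplets (four per socket, so 144 sockets).

```python
from typing import Dict

# Rack-scale targets as quoted in this article, in exaflops.
# These are announced roadmap figures, not measured benchmarks.
# Keys are shorthand labels chosen for this sketch.
SYSTEMS: Dict[str, Dict[str, float]] = {
    "GB300 NVL72": {"fp4_inference": 1.1, "fp8_training": 0.36},
    "Rubin NVL144": {"fp4_inference": 3.6, "fp8_training": 1.2},
    "Rubin Ultra NVL576": {"fp4_inference": 15.0, "fp8_training": 5.0},
}


def speedup(new: str, old: str, metric: str) -> float:
    """Ratio of one quoted metric between two systems (new / old)."""
    return SYSTEMS[new][metric] / SYSTEMS[old][metric]


def rack_fp4_exaflops(per_package_pf: float, packages: int) -> float:
    """Aggregate FP4 throughput in exaflops: per-package petaflops x package count."""
    return per_package_pf * packages / 1000.0


if __name__ == "__main__":
    baseline = "GB300 NVL72"
    for system in SYSTEMS:
        if system == baseline:
            continue
        for metric in ("fp4_inference", "fp8_training"):
            ratio = speedup(system, baseline, metric)
            print(f"{system} vs {baseline}, {metric}: {ratio:.1f}X")

    # Consistency checks against the per-GPU figures in the list above.
    # B300: 15 PF FP4 per GPU, 72 GPUs per rack.
    print(f"72 x B300: {rack_fp4_exaflops(15, 72):.2f} EF FP4")
    # Rubin Ultra: 100 PF FP4 per socket; assumes 576 chiplets / 4 = 144 sockets.
    print(f"144 x R300: {rack_fp4_exaflops(100, 576 // 4):.1f} EF FP4")
```

Against the GB300 NVL72, the Rubin generation works out to about 3.3X on both metrics, matching the text, while Rubin Ultra NVL576 comes out near 14X. The 21X figure quoted for Rubin Ultra is therefore presumably measured against an earlier baseline than the GB300 NVL72. The per-package products (72 × 15 PF ≈ 1.1 EF; 144 × 100 PF = 14.4 EF, close to the quoted 15 EF) are consistent with the rack totals.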

Strategic Implications of Nvidia’s Roadmap

Nvidia’s roadmap underscores its commitment to addressing the growing compute demands of AI, particularly for reasoning models and physical AI applications. These workloads require significantly more compute than initially anticipated and are driving the need for continuous innovation in GPU and CPU architectures.

The roadmap also highlights Nvidia’s focus on memory and interconnect advancements, which are critical for scaling AI workloads. By expanding HBM capacity or bandwidth with each generation (288 GB of HBM3E on Blackwell Ultra, 13 TB/sec of HBM4 bandwidth on Rubin, and 1 TB of HBM4E on Rubin Ultra) and doubling NVLink bandwidth, Nvidia aims to keep memory and interconnect from becoming the bottleneck as AI models grow more complex.
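To see how evenly memory and interconnect scale from one generation to the next, the figures quoted earlier can be tabulated and compared pairwise. The Python sketch below is a minimal illustration that takes the article's numbers at face value. Three values are not quoted directly but back-calculated from the text (B200 HBM capacity, B300 HBM bandwidth, and current-GPU NVLink bandwidth), and reading "1 TB" as 1,000 GB is an assumption made here.

```python
from typing import List, Tuple

Series = List[Tuple[str, float]]

# Figures quoted in this article. Entries marked "derived" are
# back-calculated from a percentage or comparison in the text.
HBM_CAPACITY_GB: Series = [
    ("B200", 288 / 1.5),   # derived: B300's 288 GB is "a 50% increase"
    ("B300", 288.0),
    ("R100", 288.0),
    ("R300", 1000.0),      # "1 TB", treated as 1,000 GB (assumption)
]

HBM_BANDWIDTH_TBS: Series = [
    ("B300", 13 / 1.625),  # derived: R100's 13 TB/sec is "a 62.5% increase"
    ("R100", 13.0),
]

# NVLink bandwidth in TB/sec (bytes, unlike the Gb/sec NIC figures).
NVLINK_TBS: Series = [
    ("current GPU (Blackwell)", 1.8),  # derived: Vera's 1.8 TB/sec C2C matches it
    ("NVLink 7 / NVSwitch 6", 3.6),
    ("NVSwitch 8", 7.2),
]


def growth(series: Series) -> List[Tuple[str, str, float]]:
    """Return (older, newer, newer / older) for each consecutive pair."""
    return [(a, b, vb / va) for (a, va), (b, vb) in zip(series, series[1:])]


if __name__ == "__main__":
    for name, series in (
        ("HBM capacity", HBM_CAPACITY_GB),
        ("HBM bandwidth", HBM_BANDWIDTH_TBS),
        ("NVLink bandwidth", NVLINK_TBS),
    ):
        for older, newer, ratio in growth(series):
            print(f"{name}: {older} -> {newer}: {ratio:.2f}X")
```

On these numbers, NVLink bandwidth doubles at each step, while HBM grows unevenly: capacity rises 1.5X from B200 to B300, stays flat from B300 to R100 (where bandwidth rises 1.625X instead), then jumps roughly 3.5X with Rubin Ultra.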

Nvidia’s roadmap is a testament to its leadership in the AI and HPC markets. By publicly sharing its plans, Nvidia not only reassures its customers and investors but also sets a high bar for competitors. As AI workloads continue to evolve, Nvidia’s commitment to innovation in GPUs, CPUs, and networking technologies will be pivotal in shaping the future of AI infrastructure. The company’s ability to deliver on its roadmap milestones will determine its success in maintaining its dominant position in the AI ecosystem.